AI and its potential impact on schools and students

AI may have grown in popularity, particularly generative AI, but what place does it have in the classroom? And how should educators teach it responsibly?

By Michaela Ely

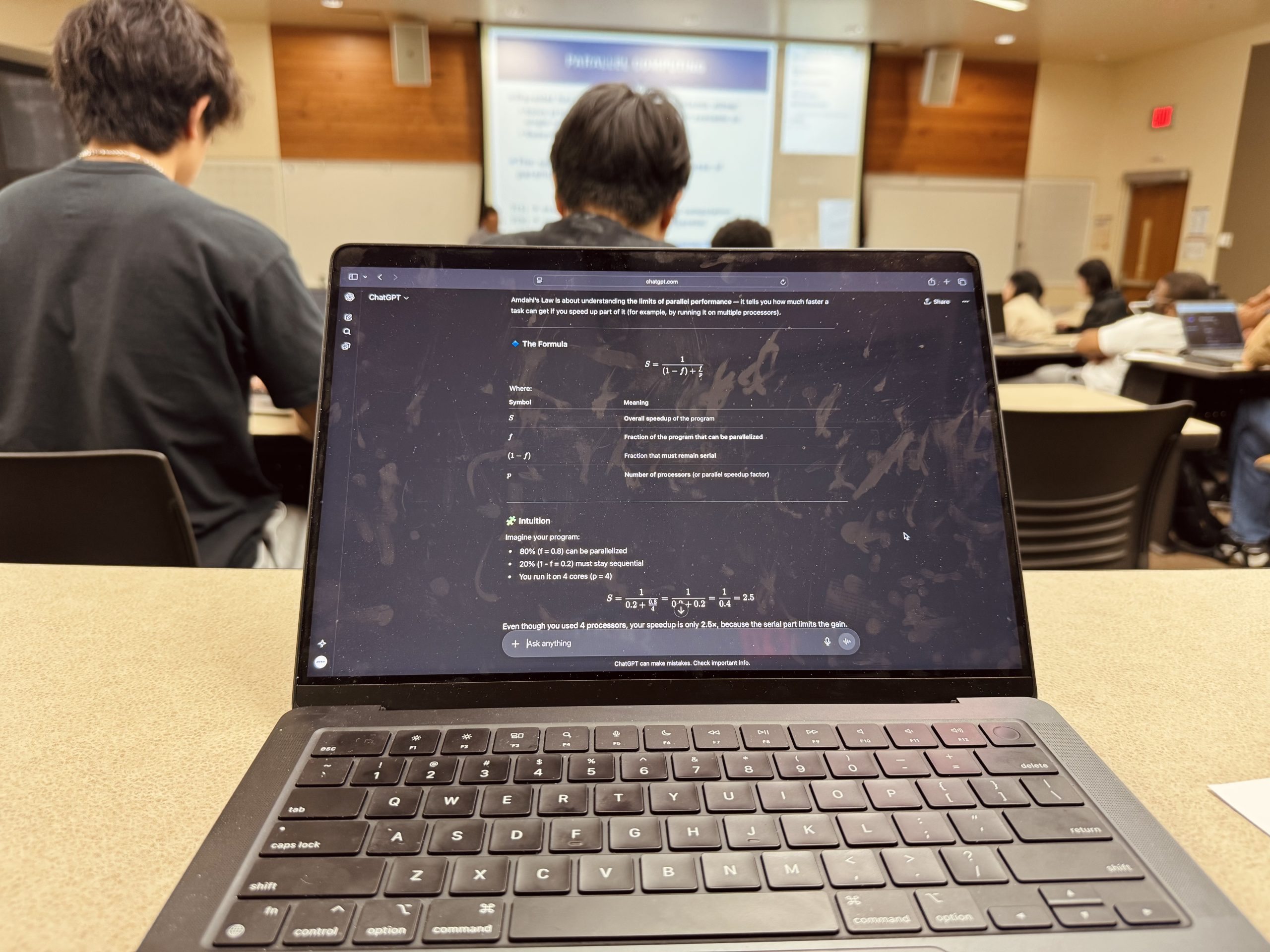

As AI platforms become more normalized in society with the development of Copilot, Gemini, ChatGPT and the soon-to-be-released UW Purple, educators must decide whether to adapt to or resist this new technology.

I have often heard AI described solely as a tool, with comparisons to how people were historically nervous about new technology such as the typewriter or the calculator. However, AI has the potential to be something far more dangerous if we leave it unchecked. This is especially true for students in K-12 education.

The use of generative AI platforms like ChatGPT has been found to reduce the cognitive load on students but also erode their critical thinking skills, according to a study published in the journal Computers in Human Behavior.

This study found that students who used large language models (LLMs) like ChatGPT had weaker reasoning and less depth of content engagement. While this study was done with German university students, the implications can be applied to K-12 students as well, as their critical thinking skills are still developing.

However, as the field of education gets more difficult for educators to navigate due to new curriculum implementation, AI platforms can provide some assistance in decoding particular learning targets and how they may align with state standards in a way that is accessible to them and their students. When I think about AI, most of the dangers lie in using AI as a creator, rather than using AI to assist.

But should AI be seen as something similar to Google? LLMs are the most common form of AI that we see, and objectively, they should not be used as search engines. LLMs and search engines both use algorithms, but the algorithms of LLMs draw upon previously observed knowledge and large sets of human-generated data rather than answering based on an index of known websites.

Using LLMs as a search engine can result in misinformation. Just as I double-check sources for bias or misinformation, anything that AI spits out as a response should be double-checked the same way.

Another challenge that has appeared recently is the danger surrounding AI chatbots and the usage of these chatbots by children and teens.

The Federal Trade Commission began an inquiry on Sept. 11 into how these services may potentially harm teens and children. This comes after the parents of a teen boy who died by suicide in April sued OpenAI, alleging that ChatGPT played a significant role in their son’s death.

A survey by Common Sense Media found that 72 percent of teens have used some sort of AI companion, with 33 percent using one for social interaction.

As a writer, future educator and climate-conscious individual, I have a strong dislike for anything AI-related. These challenges make it very difficult for me to see how AI can be used responsibly in the classroom. However, AI will not be going anywhere in the near future. So how do we adapt?

Washington State’s Office of Superintendent of Public Instruction has provided guidelines for educators to follow regarding AI usage in the classroom.

The framework emphasizes the role of educators as moderators of AI usage in the classroom. These guidelines also look at AI through the lens of a tool or a way to assist, rather than a way to create. These kinds of guidelines must be implemented on a national level in order to protect all students.

While AI will continue to have various impacts on our society, we must learn to use and adapt to it responsibly. Pandora’s box of AI has opened and will not close, no matter how much some of us may wish it would.