The cloud’s bad week shows why “resilient by default” can’t wait

AWS’s Oct. 20 meltdown, Google Cloud’s June 12 outage and Azure’s Oct. 29 hiccup weren’t flukes; they were the predictable costs of hyperscale centralization. If we keep building single points of failure into our stacks, the next bad day will be worse.

By Syed Huzaifa Bin Afzal

Apps should make more use of multi-cloud approaches. You don’t need to run your entire stack on two clouds; moving just a couple of choke points, such as static files or your public status page, to a separate provider can keep the lights on when everything else flickers.

On Oct. 20, 2025, an AWS disruption centered on the US-EAST-1 region rippled across the internet. Education platforms like Canvas, finance and government portals, consumer apps and even parts of Amazon itself were affected. AWS said services were restored by evening, but the day laid bare just how much of the web runs through one region in northern Virginia, according to Ookla.

This wasn’t an isolated cloud hiccup. On June 12, Google Cloud experienced a multi-product incident that degraded core services and hit popular platforms like Spotify and Discord, according to CRN. Google Cloud Status and third-party analyses described elevated HTTP error rates across underlying services before recovery later that day.

On Oct. 29, 2025, Microsoft reported an Azure Front Door misconfiguration that cascaded into problems affecting the Azure Portal, Entra ID (identity), App Service, SQL Database and more, according to ThousandEyes. It was a reminder that identity and edge/CDN layers are now just as “cloud critical” as compute.

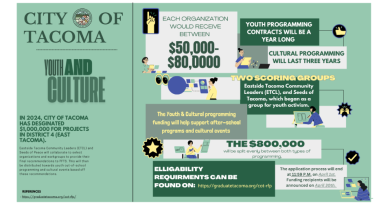

For universities and schools, the AWS outage took Canvas offline during class hours. When your learning management system is down, instruction halts. Multiple campuses documented the impact and the scramble to keep lesson plans afloat. The Tacoma Ledger previously covered these outages and highlighted their campus-wide impacts.

For businesses, the cost of an outage is unforgiving. Industry surveys routinely put average losses at hundreds of thousands of dollars per hour, and far higher in sectors like healthcare. Even small firms rack up wage losses and operational drag that compound well beyond the minute-by-minute burn, according to E-N Computers.

The pain of a cloud outage lands on people, not just on servers. Students can’t download study materials, store owners watch paying customers leave, and support teams scramble to post updates but can’t even log in to their own dashboards.

The cost isn’t just minutes of downtime. It’s missed deadlines, lost trust and a mess of follow-up work: refunds, make-up assignments, manual data entry and the awkward “we’re sorry” emails no one enjoys writing.

Here’s the uncomfortable truth: cloud concentration gives us phenomenal scale and velocity on good days, and synchronized failure on bad ones. Centralized control planes, widely shared regional dependencies and everyone piling into the same cheap, close region turn routine bugs and configuration slips into internet-wide incidents. The incentives that optimized for speed and cost now push that fragility onto customers.

Backups in the same building aren’t enough. Keep a basic copy of your site or app in another region so you can switch over to it if the main one goes down.
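For readers who build these systems, here is a minimal sketch of that switch-over idea in Python. It assumes two hypothetical endpoints, a primary deployment and a basic standby copy in another region; the URLs are placeholders, not real services. The client tries the primary first and, if the request errors out or times out, falls through to the standby.

```python
import http.client
import urllib.request
from typing import Callable, Sequence, Tuple

# Hypothetical placeholder URLs (assumption): a primary deployment and a basic
# standby copy hosted in a different region or with a different provider.
ENDPOINTS = [
    "https://primary.example.com/",
    "https://standby.example.net/",
]


def http_get(url: str, timeout: float = 3.0) -> bytes:
    """Fetch a URL with urllib.

    urlopen raises OSError subclasses (URLError, HTTPError for 4xx/5xx,
    timeouts) when the endpoint is unreachable or returns an error status.
    """
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def fetch_with_failover(
    urls: Sequence[str],
    get: Callable[[str], bytes] = http_get,
) -> Tuple[str, bytes]:
    """Try each endpoint in order; return (url, body) from the first that answers."""
    errors = []
    for url in urls:
        try:
            return url, get(url)
        except (OSError, http.client.HTTPException) as exc:
            # This endpoint is down or unreachable: record why, try the next one.
            errors.append(f"{url}: {exc}")
    # Every endpoint failed, so surface all the reasons at once.
    raise RuntimeError("all endpoints failed: " + "; ".join(errors))
```

Calling `fetch_with_failover(ENDPOINTS)` returns the URL that actually served the request along with its body. In production this switch more often lives in DNS or a load balancer than in application code (many managed DNS services offer health-checked failover records); the `get` parameter is injectable here only so the logic can be exercised without a network.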

If a handful of regions underpin critical national services, policymakers should require companies in regulated sectors such as banking to prove they can handle trouble, disclose how heavily they rely on a single region and regularly practice switching to backups.

Cloud isn’t going away, and it shouldn’t. It gives small teams superpowers and helps big institutions move faster. But concentration has a price: when a giant slips, we all stumble. The solution isn’t to abandon the cloud; it’s to stop pretending it never fails.

If your class, your shop or your app would grind to a halt during the next outage, that’s not just a provider problem; it’s a design choice.

Choose resilience on purpose. Make a Plan B that lives somewhere else. Practice using it. Offer a “good enough” mode when things break.